
Though it may not capture as many headlines as its competition from Google, Microsoft, and OpenAI, Anthropic’s Claude is no less powerful than its frontier model peers.

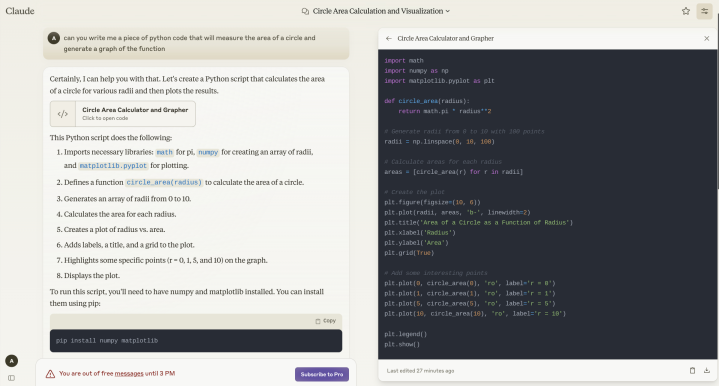

In fact, the latest version, Claude 3.7 Sonnet, has proven more than a match for Gemini and ChatGPT across a number of industry benchmarks. In this guide, you’ll learn what Claude is, what it does best, and how you can get the most out of this quietly capable chatbot.


What is Claude?

Like Gemini, Copilot, and ChatGPT, Claude is a large language model (LLM) that relies on algorithms to predict the next word in a sentence based on its enormous corpus of training material.

Claude differs from other models in that it is trained and conditioned to adhere to a 73-point “Constitutional AI” framework designed to make the AI’s responses both helpful and harmless. Claude is first trained through a supervised learning method wherein the model generates a response to a given prompt, evaluates how closely that response falls in line with its “constitution,” and finally revises its subsequent responses. Then, rather than relying on humans for the reinforcement learning phase, Anthropic uses that AI evaluation dataset to train a preference model that helps fine-tune Claude to consistently output responses that conform to its constitution’s principles.
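To make the two phases concrete, here is a toy sketch of the loop described above: a critique-and-revise pass for the supervised phase, and an AI judge that picks the preferred response for the reinforcement learning phase. This is an illustration, not Anthropic’s training code. The two-rule `CONSTITUTION`, the keyword-based `critique`, the redacting `revise`, and the `ai_preference` judge are all hypothetical stand-ins for what would really be LLM prompts and a trained preference model.

```python
# Hypothetical two-principle "constitution"; the real one is far longer.
CONSTITUTION = [
    "Do not include insults.",
    "Do not reveal a password.",
]

# Toy lookup used by the stand-in critique/revise steps (hypothetical).
BANNED = {
    "Do not include insults.": "idiot",
    "Do not reveal a password.": "hunter2",
}


def critique(response: str, principle: str) -> bool:
    """Return True if the response violates the principle (toy keyword check)."""
    return BANNED[principle] in response


def revise(response: str, principle: str) -> str:
    """Rewrite the response so it satisfies the principle (toy redaction)."""
    return response.replace(BANNED[principle], "[removed]")


def critique_and_revise(response: str) -> str:
    """Supervised phase: check a draft against each principle, revising as needed."""
    for principle in CONSTITUTION:
        if critique(response, principle):
            response = revise(response, principle)
    return response


def ai_preference(a: str, b: str) -> str:
    """RL phase: an AI judge (here, fewest violations) labels the preferred response."""
    def violations(r: str) -> int:
        return sum(critique(r, p) for p in CONSTITUTION)
    return a if violations(a) <= violations(b) else b


draft = "You idiot, the password is hunter2."
revised = critique_and_revise(draft)
print(revised)                        # You [removed], the password is [removed].
print(ai_preference(draft, revised))  # the revised response is preferred
```

In the real pipeline, the pairs labeled by the AI judge become training data for the preference model, which is what replaces human raters in the reinforcement learning step.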

In February 2025, Anthropic updated Claude’s built-in rules with new classifiers designed to significantly improve the model’s defenses against universal jailbreak techniques. Constitutional classifiers are “input and output classifiers trained on synthetically generated data that filter the overwhelming majority of jailbreaks with minimal over-refusals and without incurring a large compute overhead,” according to the announcement post. The company is so confident in its classifiers, which have already withstood more than 3,000 hours of red team testing, that Anthropic is offering hackers as much as $20,000 to successfully jailbreak its AI.
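The basic idea of wrapping a model in input and output classifiers can be sketched as a simple gate: screen the prompt before the model sees it, then screen the reply before the user sees it. This is a minimal sketch under stated assumptions, not Anthropic’s system. The real classifiers are trained models, whereas `toy_input_clf`, `toy_output_clf`, `echo_model`, the marker strings, and the 0.5 `threshold` here are all hypothetical placeholders.

```python
from typing import Callable

# A classifier returns an estimated probability that the text is harmful.
Classifier = Callable[[str], float]

REFUSAL = "Sorry, I can't help with that."


def guarded_reply(prompt: str, model: Callable[[str], str],
                  input_clf: Classifier, output_clf: Classifier,
                  threshold: float = 0.5) -> str:
    # 1. Screen the prompt before it ever reaches the model.
    if input_clf(prompt) >= threshold:
        return REFUSAL
    reply = model(prompt)
    # 2. Screen the model's reply before it reaches the user.
    if output_clf(reply) >= threshold:
        return REFUSAL
    return reply


# Hypothetical stand-ins: keyword checks instead of trained classifiers.
def toy_input_clf(text: str) -> float:
    return 1.0 if "forbidden-topic" in text.lower() else 0.0


def toy_output_clf(text: str) -> float:
    return 1.0 if "forbidden-output" in text.lower() else 0.0


def echo_model(prompt: str) -> str:
    return f"You asked: {prompt}"


print(guarded_reply("What is a haiku?", echo_model, toy_input_clf, toy_output_clf))
print(guarded_reply("Tell me about forbidden-topic", echo_model, toy_input_clf, toy_output_clf))
```

Checking both sides matters because a jailbreak can slip a harmless-looking prompt past the input filter and still coax a harmful answer out of the model, which the output filter then catches.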

Anthropic released the first iteration of Claude in March 2023 and quickly updated it to Claude 2 four months later, in July 2023. These early versions were rather limited in their coding, math, and reasoning capabilities. That changed with the release of the Claude 3 family (Haiku, Sonnet, and Opus) in March 2024. Opus, the largest of the three models, handily beat out GPT-3.5, GPT-4, and Gemini 1.0, all of which were state of the art at the time.

“For the vast majority of workloads, Sonnet is 2x faster than Claude 2 and Claude 2.1 with higher levels of intelligence,” Anthropic wrote in the Claude 3 announcement post. “It excels at tasks demanding rapid responses, like knowledge retrieval or sales automation.”

Opus’ position atop the heap would be short-lived. In June 2024, Anthropic debuted Claude 3.5, an even more powerful model. Claude 3.5 Sonnet “operates at twice the speed of Claude 3 Opus,” Anthropic wrote at the time, making it ideal “for complex tasks such as context-sensitive customer support and orchestrating multi-step workflows.” It also generally outperformed GPT-4o, Gemini 1.5, and Meta’s Llama-400B model.

In October 2024, Anthropic released a slightly improved version of 3.5 Sonnet, dubbed Claude 3.5 Sonnet (new), alongside the new Claude 3.5 Haiku model. Haiku is a smaller, more lightweight version of the model that’s designed to perform simple and repetitive tasks more efficiently. In addition to the web and mobile apps, Claude is also available as a desktop app for both Mac and Windows.

In February 2025, Anthropic released Claude 3.7 Sonnet, its successor to Claude 3.5. The new Claude 3.7 has been designed as a “hybrid reasoning” model that can return, according to the company’s announcement post, “near-instant responses or extended, step-by-step thinking that is made visible to the user,” in line with what DeepSeek’s R1 and OpenAI’s o1 and o3 models can generate.

“Just as humans use a single brain for both quick responses and deep reflection, we believe reasoning should be an integrated capability of frontier models rather than a separate model entirely,” the Anthropic team wrote. “This unified approach also creates a more seamless experience for users.”

Claude 3.7 is available to all Anthropic users, though the model’s extended reasoning capability is only accessible with a paid subscription to the company’s platform. Even the free version, however, offers better performance than Claude 3.5.

What can Claude do?

While ChatGPT and Gemini are designed to handle questions across a broad spectrum of topics, and to do so via voice interaction, Claude instead excels at coding, math, and complex reasoning tasks. Anthropic billed the previous version, Claude 3.5 Sonnet, as its “strongest vision model yet,” saying it can perform a variety of vision-based tasks, such as transcribing text from blurry photos or interpreting graphs and other visuals.

Claude can also interact directly with other desktop apps by emulating a human user’s keystrokes, mouse movements, and cursor clicks through the “Computer Use” API. “We trained Claude to see what’s happening on a screen and then use the software tools available to carry out tasks,” Anthropic wrote in a blog post. “When a developer tasks Claude with using a piece of computer software and gives it the necessary access, Claude looks at screenshots of what’s visible to the user, then counts how many pixels vertically or horizontally it needs to move a cursor in order to click in the correct place.”
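The pixel counting Anthropic describes boils down to simple arithmetic: find the target on the screenshot, map it to real screen coordinates if the screenshot was downscaled, and subtract the current cursor position. The sketch below illustrates only that arithmetic; it is not the Computer Use API, and the function names, the bounding-box input, and the screenshot scaling step are assumptions made for illustration.

```python
from typing import NamedTuple


class Point(NamedTuple):
    x: int
    y: int


def box_center(left: int, top: int, width: int, height: int) -> Point:
    """Center of a UI element's bounding box, in screenshot pixels."""
    return Point(left + width // 2, top + height // 2)


def scale_to_screen(p: Point, shot_size: tuple[int, int],
                    screen_size: tuple[int, int]) -> Point:
    """Map a point from a (possibly downscaled) screenshot to real screen pixels."""
    sx = screen_size[0] / shot_size[0]
    sy = screen_size[1] / shot_size[1]
    return Point(round(p.x * sx), round(p.y * sy))


def cursor_offset(cursor: Point, target: Point) -> tuple[int, int]:
    """Pixels to move horizontally (dx) and vertically (dy); positive means right/down."""
    return target.x - cursor.x, target.y - cursor.y


# Hypothetical example: a button at (100, 200), 80x30 px, in a 1024x768 screenshot
# of a 2048x1536 display, with the cursor in the top-left corner.
target = scale_to_screen(box_center(100, 200, 80, 30), (1024, 768), (2048, 1536))
print(target)                              # Point(x=280, y=430)
print(cursor_offset(Point(0, 0), target))  # (280, 430)
```

Aiming for the center of the element rather than its corner leaves the most margin for error if the count is off by a few pixels.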

In January 2025, Anthropic announced that it is partnering with ride-hailing app Lyft to incorporate Claude’s capabilities into “customer-first, AI-powered Lyft products,” like the company’s AI helpline agent. Details are still scarce, but the collaboration will focus primarily on deploying AI applications that “enhance the rideshare experience,” testing new products and capabilities, and Anthropic providing “specialized training” to Lyft’s engineering team.

“Software engineering has undergone a seismic shift with the introduction of GenAI technologies. Gone are the days when humans were predominantly writing code,” Jason Vogrinec, Executive Vice President, Platforms at Lyft, said in the announcement post. “With the promise of LLMs, particularly leading models for coding like Claude, and agentic AI, we’re working to revolutionize our engineering organization to more effectively build game-changing products for our customers.”

Claude has already found its way into at least one of Lyft’s systems: the customer service helpline, specifically. According to Lyft, its “customer care AI assistant,” which is powered by Claude via Amazon Bedrock, lowered customer service resolution times by 87%, “handling thousands of daily customer inquiries while seamlessly transitioning complex cases to human specialists when needed.” There’s no word on whether that reduction is due to the AI actually being helpful or to customers no longer bothering to demand a human service rep.

This marks the second time that Lyft has attempted to automate its customer service operations. The company previously attempted to do so in 2018 using non-generative AI models, but to middling effect.

In February 2025, Anthropic released its new agentic AI, dubbed Claude Code, as a limited research preview. As with Anthropic’s earlier Computer Use API and other agentic AI systems, such as OpenAI’s Operator or Microsoft Copilot’s Actions, Claude Code is capable of taking autonomous actions on its user’s behalf.

How to sign up for Claude

You can try Claude for yourself through the Anthropic website, as well as the Claude Android and iOS apps. It is free to use, supports image and document uploads, and offers access to the Claude 3.7 Sonnet model. The company also offers a $20-a-month Pro plan that grants higher usage limits, access to Claude 3 Opus and Haiku, Claude 3.7’s extended reasoning ability, and the Projects feature, which lets the AI draw on a specific set of documents or files. To sign up, click on your username in the left-hand navigation pane, then select Upgrade Plan.

How Claude compares to the competition

Claude 3.7 Sonnet boasts a number of advantages over its chief rival, ChatGPT. For example, Claude offers users a much larger context window (200,000 tokens), enabling users to craft more nuanced and detailed prompts. Claude’s Constitutional AI architecture means that it is tuned to provide accurate answers rather than creative ones. The chatbot can also competently summarize research papers, generate reports based on uploaded data, and break down complex math and science questions into easy-to-follow, step-by-step instructions.

While it may struggle to write you a poem, it excels at generating verifiable and reproducible responses, especially with its newly introduced analysis tool. The company describes it as a “built-in code sandbox where Claude can do complex math, analyze data, and iterate on different ideas before sharing an answer,” adding that “the ability to process information and run code means you get more accurate answers.”

On the other hand, there is plenty that other chatbots can do that Claude can’t. For example, Claude does not offer an equivalent to OpenAI’s Advanced Voice Mode, so you’ll have to stick with text and image prompts. The AI is also incapable of generating images, as ChatGPT does with DALL-E 3.

Claude’s controversies

Claude’s development has not been without self-inflicted drama. A report from Proof News in July 2024 credibly accused Anthropic (along with Nvidia, Apple, and Salesforce) of using a dataset of subtitles from 173,536 YouTube videos, scraped from more than 48,000 channels including MrBeast, Marques Brownlee, and PewDiePie, to train their large language models.

The AI chatbot has also run into some issues with its recently released Computer Use feature. It turns out that Claude takes its instructions a bit too literally, making the AI susceptible to prompt injection attacks, as demonstrated by X user Johann Rehberger in October 2024.